While most SEOs chase top rankings, sometimes the opposite is needed: removing URLs from Google. Perhaps your whole staging environment was indexed, sensitive material that should never have been exposed to Google was indexed, or spam pages created after your site was hacked are showing up in Google. In any case, you will want those URLs removed as quickly as possible. This tutorial explains exactly how to do that.

Reasons for URL Removal

The most common scenarios in which you need to remove URLs from Google quickly are −

- You have duplicate or out-of-date material.

- The staging environment you are using has been indexed.

- Your website has been compromised and includes spam pages.

- Sensitive content has been indexed inadvertently.

How To Prioritise Removals?

If you need many pages removed from Google’s index, prioritise them as follows.

Highest priority − These pages are usually security-related or contain confidential information. This includes content containing personally identifiable information (PII), client data, or proprietary information.

Medium priority − This generally covers content intended for a specific group of users. Company intranets or corporate portals, member-only content, and staging, testing, or development environments are all examples of restricted content.

Low priority − These pages usually contain duplicate content. Pages served from multiple URLs, URLs with parameters, and, once again, staging or testing environments are examples.


Methods For Taking URLs Off Google

Erase your content

If you have outdated content on the site that is no longer useful, you can delete it. Depending on the URLs, there are two options −

Implement 301 redirects to the most relevant URLs on your site if the old URLs receive traffic or have links pointing to them. Avoid redirecting to unrelated URLs, as Google may treat such redirects as soft 404 errors and will then pass no value to the redirect target.

If the URLs have no traffic or links, return the HTTP 410 status code to tell Google that the URLs have been permanently removed. Google typically drops URLs that return a 410 from the index quickly.

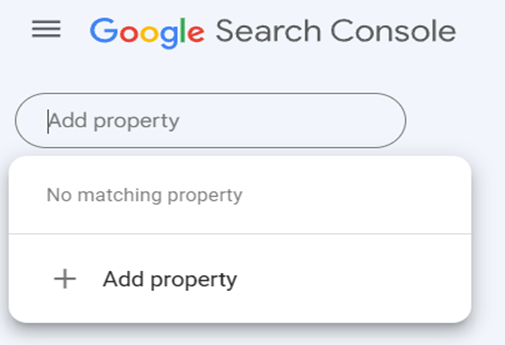
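The choice between a 301 and a 410 can be sketched in a few lines of Python. This is only an illustration using the standard library, not a production setup: the paths in `REDIRECTS` and `GONE` are hypothetical, and on a real site the same rules would usually live in the web server or CMS configuration.

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical removed URLs that still earn traffic or links,
# mapped to the most relevant live page.
REDIRECTS = {"/old-pricing": "/pricing"}

# Hypothetical removed URLs with no traffic or links.
GONE = {"/summer-sale-2019"}


def resolve_removed_path(path):
    """Return (status, headers) for a removed path, or None if the path is live."""
    if path in REDIRECTS:
        return 301, {"Location": REDIRECTS[path]}
    if path in GONE:
        return 410, {}
    return None


class RemovalHandler(BaseHTTPRequestHandler):
    """Minimal handler that answers removed URLs with a 301 or a 410."""

    def do_GET(self):
        result = resolve_removed_path(self.path)
        if result is None:
            # Anything not listed above is treated as a live page.
            self.send_response(200)
            self.end_headers()
            self.wfile.write(b"live page")
            return
        status, headers = result
        self.send_response(status)
        for name, value in headers.items():
            self.send_header(name, value)
        self.end_headers()
```

Keeping the decision in `resolve_removed_path` makes it easy to check the mapping without starting a server.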

Using Google Search Console

Log in to Google Search Console and choose the right property.

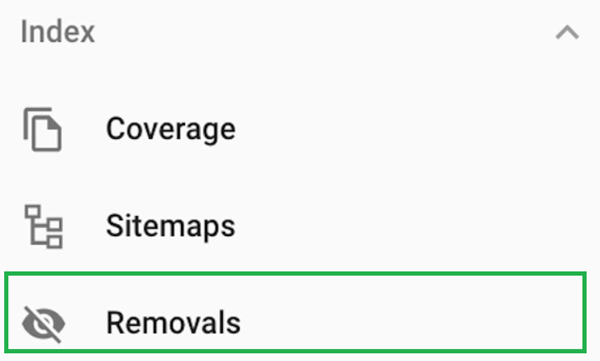

In the left-column menu, choose Removals.

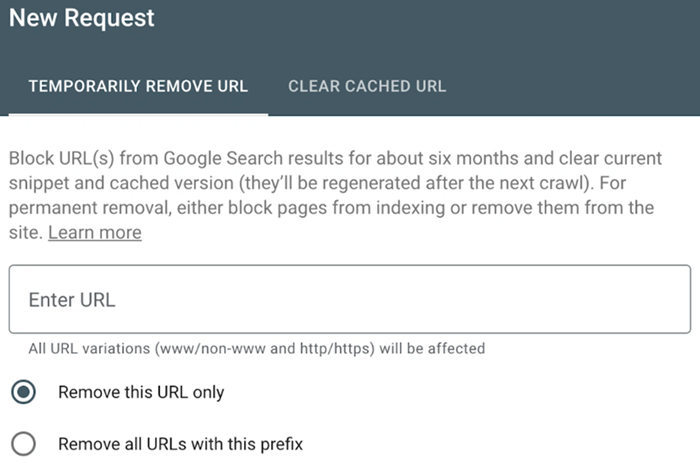

Click the NEW REQUEST button, which opens the TEMPORARILY REMOVE URL tab.

Select “Remove this URL only”, enter the URL you wish to remove, then click Next. Google will now hide the URL for about six months. Remember that the URL remains in Google’s index, so you still need to take additional steps to remove it permanently.

Repeat the process as often as required. If the confidential material sits in a specific directory, we recommend the “Remove all URLs with this prefix” option to hide every URL in that directory at once. If you have a large number of URLs that do not share a prefix, we suggest hiding the ones that appear most frequently in Google first.

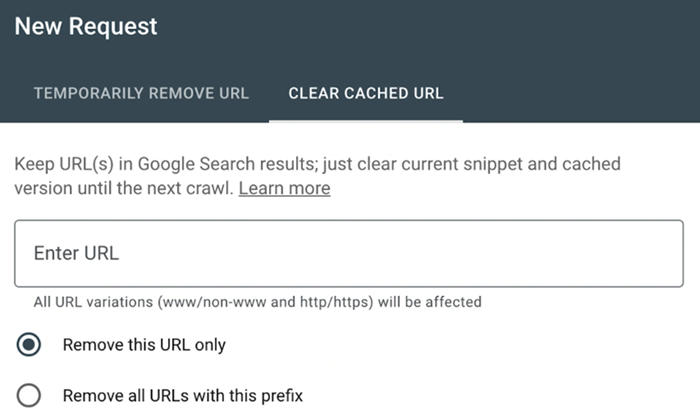
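When many URLs need hiding, it can help to see which directories hold most of them before deciding where a prefix request makes sense. The sketch below groups a list of URLs by directory; the function name and the example URLs are assumptions for illustration, and the list itself would come from your own crawl or export.

```python
from collections import Counter
from urllib.parse import urlsplit


def count_by_directory(urls):
    """Count URLs per directory prefix (scheme + host + directory path)."""
    counts = Counter()
    for url in urls:
        parts = urlsplit(url)
        # Drop the last path segment to get the containing directory.
        directory = parts.path.rsplit("/", 1)[0] + "/"
        counts[f"{parts.scheme}://{parts.netloc}{directory}"] += 1
    return counts


# Hypothetical URLs that should be hidden.
urls = [
    "https://example.com/private/a.html",
    "https://example.com/private/b.html",
    "https://example.com/blog/post",
]

for prefix, total in count_by_directory(urls).most_common():
    print(prefix, total)
```

Directories with many affected URLs are candidates for a single prefix request; the rest can be submitted one by one.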

For cached content

- Access your Google Search Console account.

- Choose the right property.

- In the left-column menu, choose Removals.

- Select the NEW REQUEST option.

- Choose the CLEAR CACHED URL tab.

- Choose whether Google should clear the cache for a single URL or for all URLs that begin with a particular prefix.

- Type in the URL and click Next.

Remember that the noarchive meta robots tag tells Google not to store cached versions of your pages.

Use the Noindex Meta tag

The noindex meta tag is one of the most widely used methods for removing URLs from search results or preventing them from being indexed.

It is a snippet placed in the head element of a page’s HTML that instructs Google not to show the page in search results.

<meta name="robots" content="noindex" />

The content value may read “noindex, follow” instead of “noindex”; both have the same effect.

If you have access to the site’s HTML, you can add this tag manually. Alternatively, send the same directive in the X-Robots-Tag HTTP header. Search engines must be able to crawl the pages in order to see these directives, so make sure the pages are not blocked in robots.txt.
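To confirm that a page actually carries the directive, you can check both the HTML and the response headers. The following is a minimal sketch using only the Python standard library; `is_noindexed` is a hypothetical helper, and it deliberately ignores details such as user-agent-specific values in X-Robots-Tag.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindexed(html, headers):
    """Return True if the meta robots tag or the X-Robots-Tag header says noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = headers.get("X-Robots-Tag", "")
    header_directives = {d.strip().lower() for d in header_value.split(",")}
    return "noindex" in parser.directives or "noindex" in header_directives


page = '<html><head><meta name="robots" content="noindex, follow" /></head></html>'
print(is_noindexed(page, {}))
```

Here `headers` is assumed to be a plain dictionary of response headers with the key spelled `X-Robots-Tag`; real HTTP clients may expose headers differently.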

Canonicalization

Canonicalization helps when you have multiple versions of a page and want to consolidate signals, such as links, into a single version. It is typically used to handle duplicate content by combining several versions of a page into one indexed URL.

- Canonical tags − A canonical tag names another URL as the canonical version, the one you want shown. This works well when the pages are duplicates or very similar. When pages are too different, the canonical may be ignored, because it is a hint rather than a directive.

- Redirects − The redirect SEOs use most is the 301, which tells search engines such as Google that you want the final URL to be the one shown in search results and the one where signals are consolidated. A 302, or temporary redirect, tells search engines that you want the original URL to remain in the index and signals to be consolidated there.
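A quick way to audit which canonical a page declares is to parse its head for a link element with rel="canonical". The sketch below uses only the standard library; `find_canonical` is a hypothetical helper and the example URL is a placeholder.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if there is none."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


duplicate_page = '<head><link rel="canonical" href="https://example.com/shoes" /></head>'
print(find_canonical(duplicate_page))
```

Running this over each duplicate URL shows whether they all point to the version you want kept.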

How To Get Rid Of URLs That Are Not On Your Website?

Contact the web admin

Contact the site’s owners and ask them to add a canonical or a link pointing to your page, set up a 301 redirect to your URL, or delete the content entirely.

What if the site’s owners fail to respond or refuse to act?

You can pursue other options if the site owner does not respond to your requests to remove the content. If you have a legal basis for removal, such as a copyright claim, you can approach the site’s web host, who will frequently remove content that infringes copyright. Once the content is gone, you can use Google’s “Remove outdated content” tool to get it out of the index and cache.

Eliminate Images

The simplest way to remove images from Google is robots.txt. Although unofficial support for removing pages through robots.txt was dropped, disallowing the crawling of images is still the correct way to remove them.

For just one image:

User-agent: Googlebot-Image

Disallow: /images/dogs.jpg

For all photos:

User-agent: Googlebot-Image

Disallow: /
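Before deploying rules like these, you can test them locally. The sketch below feeds both rule sets to Python's `urllib.robotparser`, which approximates how a crawler reads robots.txt but is not Google's own parser; the `example.com` URLs are placeholders. Each User-agent line is written directly above its Disallow line, as it would be in the actual file.

```python
from urllib.robotparser import RobotFileParser

# The two rule sets from the text, one group each.
SINGLE_IMAGE_RULES = "User-agent: Googlebot-Image\nDisallow: /images/dogs.jpg\n"
ALL_IMAGES_RULES = "User-agent: Googlebot-Image\nDisallow: /\n"


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


dog = "https://example.com/images/dogs.jpg"
cat = "https://example.com/images/cats.jpg"

print(is_allowed(SINGLE_IMAGE_RULES, "Googlebot-Image", dog))
print(is_allowed(SINGLE_IMAGE_RULES, "Googlebot-Image", cat))
print(is_allowed(ALL_IMAGES_RULES, "Googlebot-Image", cat))
```

Because the rules name only Googlebot-Image, other crawlers such as the regular Googlebot are unaffected and can still crawl the pages themselves.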

Typical Removal Mistakes to Prevent

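Avoid these frequent mistakes when removing URLs −

- Blocking crawling in robots.txt. A blocked page can remain in the index, and Google cannot see a noindex tag on a page it is not allowed to crawl.

- Rather than blocking the page in robots.txt, it is best to remove it using noindex.

- Relying on the nofollow tag. Nofollow applies to links and does not remove a page from the index.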

Conclusion

There are several scenarios in which you will want to remove URLs from Google quickly. Each case calls for its own approach, so no “one size fits all” solution exists. And, if you’ve been paying attention, you’ll have noticed that most situations in which URLs must be removed can be prevented.

Forewarned is forearmed!
